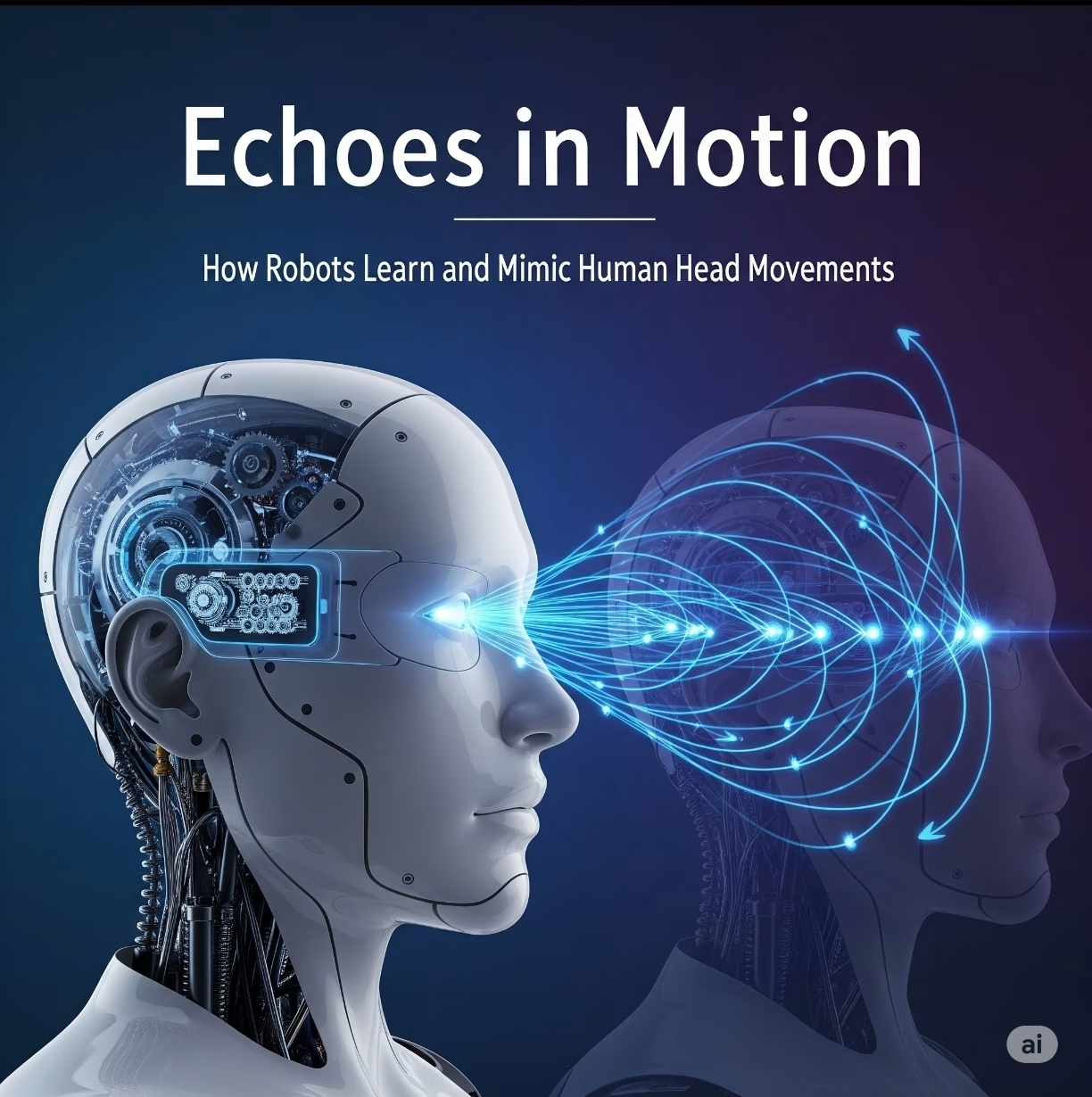

In robotics, one of the most fascinating advancements is the ability of humanoid robots to imitate human-like head movements. These subtle yet expressive gestures play a key role in communication, perception, and human-robot interaction. But how exactly do robots achieve this?

🤖 The Importance of Head Movements in Robots

Head movements are critical in:

- Showing attention – turning the head towards a speaker

- Expressing emotion – nodding, tilting, or shaking

- Supporting communication – complementing verbal language

- Tracking objects – following a moving target with vision or other sensors

For social robots, head motion is not just mechanical; it conveys intent and personality.

🧩 How Do Robots Learn Head Movements?

There are three primary approaches to producing head movement in robots, ranging from hand-coded to learned:

1. Manual Programming

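The developer hard-codes specific target angles for the servo motors that control the neck:

```python
# Example in Python for a servo-controlled head (assumes pyFirmata + an Arduino running StandardFirmata; port and pins are placeholders)
from pyfirmata import Arduino

board = Arduino("/dev/ttyACM0")
servo_pan = board.get_pin("d:9:s")    # pan servo on digital pin 9, servo mode
servo_tilt = board.get_pin("d:10:s")  # tilt servo on digital pin 10, servo mode
servo_pan.write(90)   # center
servo_tilt.write(70)  # slightly up (actual direction depends on how the servo is mounted)
```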

This method is simple and predictable, but it cannot adapt: the robot only ever performs the motions it was explicitly given.
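Manually programmed gestures are often written as short sequences of keyframe angles. The minimal sketch below hard-codes a nod for the tilt servo and linearly interpolates between keyframes; the keyframe values, the step count, and the function name are arbitrary illustrative choices. Note that the sequence plays back identically every time, which is exactly the lack of adaptability described above.

```python
# A hard-coded "nod" gesture for the tilt servo, in degrees (90 = level).
# Keyframe values and steps_per_segment are illustrative assumptions.
NOD_KEYFRAMES = [90, 110, 80, 90]

def interpolate_keyframes(keyframes, steps_per_segment=20):
    """Linearly interpolate between successive keyframes and return every intermediate angle."""
    angles = []
    for start, end in zip(keyframes, keyframes[1:]):
        for i in range(steps_per_segment):
            t = i / steps_per_segment
            angles.append(start + (end - start) * t)
    angles.append(keyframes[-1])  # finish exactly on the last keyframe
    return angles

trajectory = interpolate_keyframes(NOD_KEYFRAMES)
# On hardware, each angle would be sent in turn (e.g. servo_tilt.write(round(angle)))
# with a short pause between writes to set the gesture's speed.
```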

2. Imitation Learning

The robot uses sensors (camera, gyroscope, etc.) to observe human head motion, often with machine learning models that track facial keypoints.

Steps:

- Estimate the head's orientation (yaw, pitch, roll) with computer vision (e.g., MediaPipe, OpenCV)

- Translate it into servo/motor commands

- Store and generalize the movement pattern
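The translate-and-store steps can be sketched in plain Python. This assumes an upstream estimator (for example one built on MediaPipe or OpenCV, not shown) already supplies the person's head yaw and pitch in degrees. The class name, the 90-degree servo center, the 30 to 150 degree limits, the sign conventions, and the smoothing factor are all hypothetical choices for illustration.

```python
# Sketch: map an observed human head pose to pan/tilt servo commands and record them.
# Assumptions (hypothetical): yaw/pitch in degrees from an upstream pose estimator,
# 90 degrees = servo center, and the robot mirrors the person it faces (yaw negated).

def clamp(value, low, high):
    return max(low, min(high, value))

class HeadImitator:
    def __init__(self, smoothing=0.3, limits=(30, 150)):
        self.smoothing = smoothing  # weight given to the newest measurement (0..1)
        self.limits = limits        # assumed mechanical range of both servos, in degrees
        self.pan = 90.0
        self.tilt = 90.0
        self.recording = []         # stored (pan, tilt) commands for later replay or learning

    def update(self, human_yaw, human_pitch):
        # Negate yaw so the robot moves like a mirror image of the person.
        target_pan = clamp(90 - human_yaw, *self.limits)
        target_tilt = clamp(90 + human_pitch, *self.limits)
        # Exponential moving average damps frame-to-frame jitter in the pose estimate.
        a = self.smoothing
        self.pan = a * target_pan + (1 - a) * self.pan
        self.tilt = a * target_tilt + (1 - a) * self.tilt
        self.recording.append((round(self.pan), round(self.tilt)))
        return self.recording[-1]
```

Replaying `recording` reproduces a single demonstration; generalizing the pattern would require fitting a model to many such recordings, which this sketch does not attempt.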

3. Reinforcement Learning (RL)

More advanced robots use RL to optimize head movements based on feedback from interaction:

- For example, if users engage more when the robot looks directly at them, the robot learns to favor that behavior.
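The feedback loop above can be illustrated with a deliberately simplified stand-in for RL: an epsilon-greedy multi-armed bandit, which is the special case of RL with a single state and immediate rewards. The two gaze behaviors, the simulated user's engagement probabilities, and the function names are hypothetical; a real robot would have to derive its reward from sensed signals.

```python
import random

# Epsilon-greedy bandit choosing between two gaze behaviors (hypothetical names).
BEHAVIORS = ["look_at_user", "look_away"]

def simulated_engagement(behavior, rng):
    # Hypothetical user: engages 80% of the time under direct gaze, 30% otherwise.
    p = 0.8 if behavior == "look_at_user" else 0.3
    return 1.0 if rng.random() < p else 0.0

def learn_gaze_policy(steps=1000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    value = {b: 0.0 for b in BEHAVIORS}  # running mean reward per behavior
    count = {b: 0 for b in BEHAVIORS}
    for _ in range(steps):
        if rng.random() < epsilon:
            behavior = rng.choice(BEHAVIORS)          # explore
        else:
            behavior = max(BEHAVIORS, key=value.get)  # exploit the current best estimate
        reward = simulated_engagement(behavior, rng)
        count[behavior] += 1
        # Incremental mean update: V <- V + (r - V) / n
        value[behavior] += (reward - value[behavior]) / count[behavior]
    return value, count

values, counts = learn_gaze_policy()
```

After training, the behavior with the higher estimated value (here, direct gaze) is chosen most of the time, while occasional exploration keeps testing the alternative.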

🔧 Hardware Behind Head Motion

Most humanoid robots use:

- Servo motors driving a neck with typically 2–3 degrees of freedom (pan, tilt, and sometimes roll)

- Gyroscopes/IMUs to stabilize or replicate motion

- Camera modules for vision-based tracking

- Neck brackets and gear joints to provide smooth motion

Popular social robot platforms such as NAO and Pepper have actuated heads with yaw (pan) and pitch (tilt) joints that developers can program directly.
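One common way an IMU helps stabilize a robot head is a complementary filter, which blends the integrated gyroscope rate (accurate short-term but drifting) with the pitch implied by the accelerometer's gravity reading (noisy but drift-free). The sketch below assumes degrees, an accelerometer in units of g with x forward and z up, and a 90-degree servo center; real IMUs differ in axis order and sign, and the function names are hypothetical.

```python
import math

def accel_pitch_deg(ax, ay, az):
    # Pitch implied by the gravity vector; only valid while the head is not accelerating.
    # The sign convention here is an assumption and varies between IMUs.
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))

def complementary_step(pitch_prev, gyro_rate, ax, ay, az, dt, alpha=0.98):
    # Trust the integrated gyro (deg/s * s) short-term and the accelerometer long-term,
    # which cancels the slow drift that pure gyro integration accumulates.
    return alpha * (pitch_prev + gyro_rate * dt) + (1 - alpha) * accel_pitch_deg(ax, ay, az)

def stabilizing_tilt(pitch_deg, center=90.0):
    # Rotate the tilt servo opposite to the measured body pitch so the camera stays level.
    return center - pitch_deg
```

Calling `complementary_step` once per IMU sample (here every `dt` seconds) and feeding the result to `stabilizing_tilt` gives a simple counter-rotation loop; `alpha` trades gyro responsiveness against accelerometer drift correction.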

🧠 AI Techniques Involved

- Facial landmark detection – used to track head pose

- Pose estimation models – estimate the head's 3D orientation (yaw, pitch, roll)

- Inverse kinematics – compute the joint angles that point the head at a desired orientation or gaze target

- LSTM or Transformer models – used in advanced robots for dynamic gesture generation
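For a simple pan-tilt neck, the inverse kinematics has a closed form: the pan (yaw) angle follows from the target's horizontal direction and the tilt (pitch) angle from its elevation. The sketch assumes the target is given in a head-centered frame (x forward, y left, z up), that the pan and tilt axes intersect at the origin, and that positive pitch means looking up; necks with offset joints or extra degrees of freedom need a general IK solver instead. The function name is hypothetical.

```python
import math

def look_at(x, y, z):
    """Return (yaw, pitch) in degrees that point a pan-tilt head at target (x, y, z).

    Assumed frame: x forward, y left, z up, origin where the pan and tilt axes meet.
    Convention: positive pitch = look up. In a right-handed frame, a positive rotation
    about y tilts the head down, so many robots use the opposite pitch sign.
    """
    yaw = math.degrees(math.atan2(y, x))                   # pan toward the target
    pitch = math.degrees(math.atan2(z, math.hypot(x, y)))  # then tilt toward its elevation
    return yaw, pitch
```

To drive the servos from the earlier example, these angles would still need to be offset to the 90-degree center and clamped to the joints' mechanical limits.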

Image credit (top image): ATR / Wikimedia Commons. Caption: A humanoid robot capable of interactive head movements.

🧪 Future Applications

- Autism therapy robots using facial expressions

- Retail/guide robots adapting gaze to attract attention

- Robotic pets that mimic user head gestures for bonding

💬 Final Thoughts

Robots with human-like head movement open doors to natural, engaging, and emotionally intelligent interactions. They bridge the gap between humans and machines—not just through functionality, but through expression.

👥 Share your thoughts in the comments and join the discussion on ROBOFORUM – the community for future-minded thinkers in robotics and AI!